Nvidia CEO Jensen Huang believes physical artificial intelligence will be the next big thing. Emerging robots will take many forms, all powered by AI.

Nvidia has recently touted a future where robots will be everywhere: in the kitchen, the factory, the doctor’s office, and on the highway, to name just a few environments where repetitive tasks will increasingly be performed by intelligent machines. And Jensen’s company will, of course, provide all the software and hardware needed to train and run the necessary AI models.

What is physical AI?

Jensen describes the current stage of AI as pioneering AI: creating foundation models and the tools needed to refine them for specific roles. The next phase, which is already underway, is enterprise AI, in which chatbots and AI models improve the productivity of enterprise employees, partners, and customers. At the peak of this phase, everyone will have a personal AI assistant, or even a collection of AIs that each help perform specific tasks.

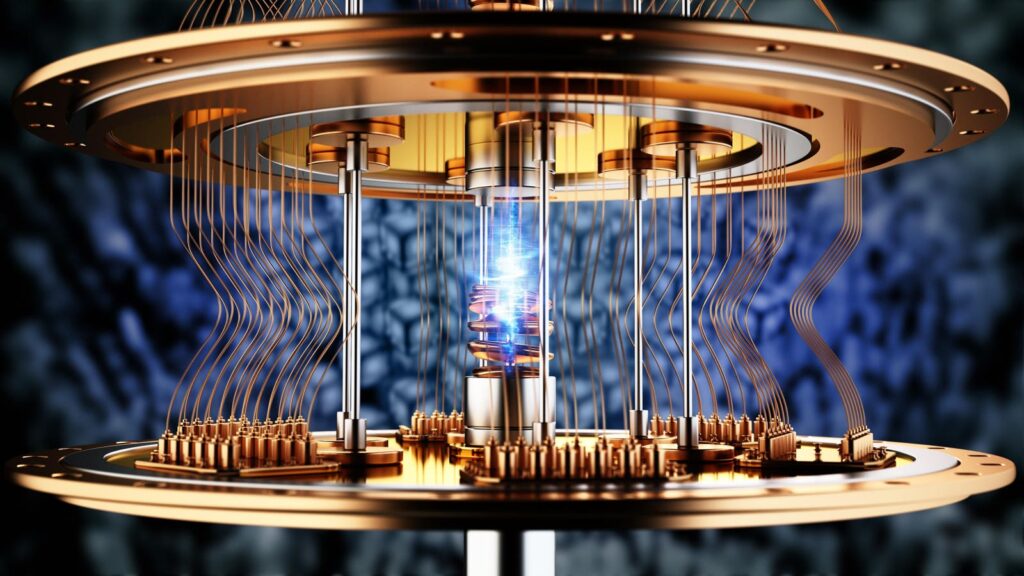

In these first two stages, AI tells us things, or shows us things, by generating the next most likely word or token in a sequence. But the third and final stage, according to Jensen, is physical AI, in which the intelligence takes a physical form and interacts with the world around it. Doing this well requires integrating data from sensors and manipulating objects in three-dimensional space.

Jensen presents two robotic friends at GTC’24.

“Building foundation models for general humanoid robots is one of the most exciting problems to solve in AI today,” said Jensen Huang, founder and CEO of NVIDIA. “The enabling technologies are coming together for leading roboticists around the world to take giant leaps towards artificial general robotics.”

OK, so you have to design the robot and its brain. Clearly a job for AI. But how do you test the robot against the nearly infinite number of circumstances it might encounter, many of which cannot be predicted, let alone replicated, in the physical world? And how will we control it? You guessed it: we’ll use AI to simulate the world the ’bot will occupy, along with the host of gadgets and creatures it will interact with.

“We will need three computers… one to create the AI… one to simulate the AI… and one to run the AI,” Jensen said.

The three-computer problem

The “three-body problem” of robotics.

Jensen, of course, is talking about Nvidia’s portfolio of hardware and software. The process begins with Nvidia H100 and B100 servers to create the AI; continues with workstations and servers running Nvidia Omniverse on RTX GPUs to simulate and test the AI and its environment; and ends with Nvidia Jetson (soon with Blackwell GPUs) to provide onboard, real-time sensing and control.

Nvidia has also introduced GR00T, which stands for Generalist Robot 00 Technology, a foundation model designed to understand and imitate movements by observing human actions. GR00T will teach robots the coordination, dexterity, and other skills they need to navigate, adapt to, and interact with the real world. In his GTC keynote, Huang demonstrated several such robots on stage.

Two new NIM microservices will allow roboticists to develop simulation workflows for generative physical AI in NVIDIA Isaac Sim, a reference robotics simulation application built on the NVIDIA Omniverse platform. The first, the MimicGen NIM microservice, generates synthetic motion data from recorded teleoperation data captured with spatial computing devices such as Apple Vision Pro. The second, the RoboCasa NIM microservice, generates robot tasks and simulation-ready environments in OpenUSD, the universal framework that underpins Omniverse for developing and collaborating within 3D worlds.

Finally, NVIDIA OSMO is a managed cloud service that allows users to orchestrate and scale complex robotics development workflows across distributed computing resources, whether on-premises or in the cloud.

OSMO simplifies robot training and simulation workflows, cutting development and deployment cycle times from months to less than a week. Users can visualize and manage a range of tasks, such as generating synthetic data, training models, performing reinforcement learning, and testing at scale, for humanoids, autonomous mobile robots, and industrial manipulators.

So how do you design a robot that can grab objects without crushing or dropping them? Nvidia Isaac Manipulator provides state-of-the-art dexterity and AI capabilities for robotic arms, built on a collection of foundation models. Early ecosystem partners include Yaskawa, Universal Robots (a Teradyne company), PickNik Robotics, Solomon, READY Robotics, and Franka Robotics.

OK, how do you train a robot to “see”? Isaac Perceptor provides multi-camera, 3D surround-vision capabilities, which are increasingly used in autonomous mobile robots in manufacturing and fulfillment operations to improve worker efficiency and safety while reducing error rates and costs. Early adopters include ArcBest, BYD, and KION Group, which aim to achieve new levels of autonomy in material handling and other operations.

For robots in operation, the new Jetson Thor system-on-chip (SoC) includes a Blackwell-architecture GPU with a transformer engine that delivers 800 teraflops of 8-bit floating-point AI performance, enough to run multimodal generative AI models such as GR00T. With a functional safety processor, a high-performance CPU cluster, and 100 GB of Ethernet bandwidth, it significantly simplifies design and integration efforts.

Conclusions

Just when you thought it was safe to go back in the water: da dum. Da dum. Da dum. Here come the robots. Jensen believes that robots will have to take human form because the factories and other environments they will operate in were all designed for human workers. It is much more economical to design humanoid robots than to redesign the factories and spaces in which they will be used.

Even if it’s just your kitchen.